M.N.: Were those "Russian-funded projects" really funded by Germany and/or New Abwehr? This data could be accessed from anywhere, not only from Russia: “I think that there is a genuine risk that this data has been accessed by quite a few people and it could be stored in various parts of the world, including Russia..." - 6:49 PM 1/11/2019


M.N.: Were those "Russian-funded projects" really funded by Germany and/or New Abwehr? This data could be accessed from anywhere, not only from Russia:

“I think that there is a genuine risk that this data has been accessed by quite a few people and it could be stored in various parts of the world, including Russia, given the fact that the professor who was managing the data harvesting process was going back and forth between the U.K. and Russia at the same time that he was working for Russian-funded projects on psychological profiling,” Wylie told NBC’s Chuck Todd during a “Meet the Press” segment.

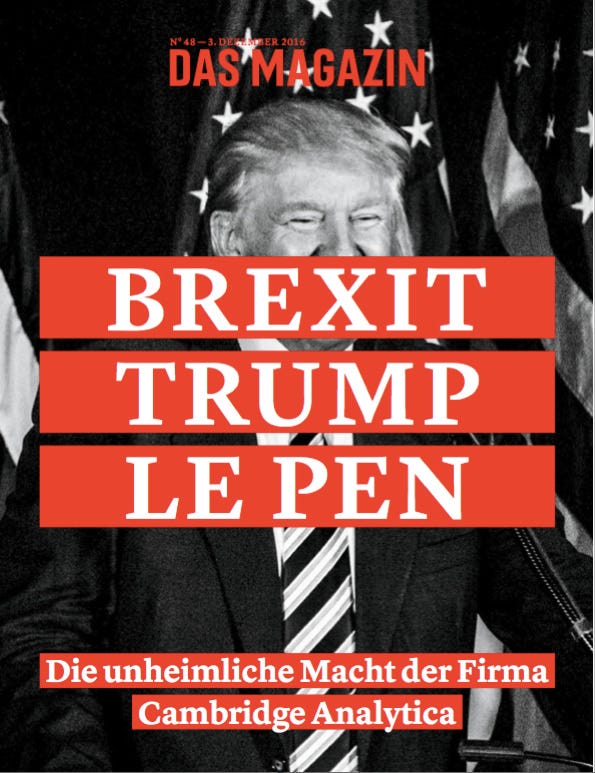

Signs for company Cambridge Analytica in the lobby of the building in which they are based on March 21, 2018 in London, England.

Getty Images

The number of Facebook users affected by the recent data sharing scandal could exceed 87 million and records could be stored in Russia, Cambridge Analytica whistleblower Christopher Wylie said on Sunday.

Wylie said that Aleksandr Kogan, whose quiz app harvested the data of tens of millions of Facebook users, could have allowed that data to be stored in Russia. An organization run by Kogan, called Global Science Research (GSR), shared the data with controversial political data analytics firm Cambridge Analytica without the users’ permission.

“I think that there is a genuine risk that this data has been accessed by quite a few people and it could be stored in various parts of the world, including Russia, given the fact that the professor who was managing the data harvesting process was going back and forth between the U.K. and Russia at the same time that he was working for Russian-funded projects on psychological profiling,” Wylie told NBC’s Chuck Todd during a “Meet the Press” segment.

“I couldn’t tell you how many people had access to it, that’s a question better answered by Cambridge Analytica, but I can say that various people had access to it.”

Facebook and Cambridge Analytica were not immediately available for comment when contacted by CNBC. CNBC also contacted Kogan’s Cambridge University email address, but the academic was not available at the time of publication.

Wylie believes the total number of Facebook users whose data was shared could be even more than the 87 million admitted by Facebook last week. Initial reports by the Observer and New York Times newspapers put the figure at 50 million. Cambridge Analytica has said that it licensed data on no more than 30 million Facebook users from GSR.



Facebook data gathered by Cambridge Analytica (CA) was accessed from Russia, an MP has said.

Damian Collins, who is leading a parliamentary inquiry into fake news, told CNN that the Information Commissioner's Office (ICO) had found evidence that files were accessed from Russia and other countries.

He said: "I think what we want to know now is who were those people and what access did they have, and were they actually able to take some of that data themselves and use it for whatever things they wanted."

He added: "So is it possible, indirectly, that the Russians learned from Cambridge Analytica, and used that knowledge to run ads in America during the presidential election as well."

CA folded as a company earlier this year, shortly after being suspended by Facebook amid allegations it amassed data on millions of voters from their profiles and misused it.

We know our data has been left vulnerable, or sold, or misused. But do we really understand what that means for our safety and security online — and when it actually matters?

Posted on January 10, 2019, at 4:44 p.m. ET

Over the holidays, PopSugar’s Twinning app experienced a sudden viral resurgence. The app, which uses facial recognition to tell you what celebrities you resemble, dominated Facebook, Twitter, and Instagram. Bored, housebound vacationers took untold numbers of selfies, uploaded them to the app, and posted the results for all to see.

Then, on New Year’s Eve, they learned those selfies were stored unprotected on Twinning’s servers and were “easily downloadable by anyone who knew where to look.”

The revelation immediately sparked other concerns about the app, namely its terms of service agreement, which granted PopSugar sweeping permissions over submitted images. As one business law site noted, “They can use the image to create new content unrelated to #twinning. They are — based on the language in the terms — likely even entitled to sell your images.”

Twinning’s privacy debacle was another in a seemingly endless string of such incidents arising from the opaque and sloppily Byzantine policies and practices that govern our digital information. In the final months of 2018, we learned about massive data security failures at companies from Facebook to British Airways. T-Mobile hacks exposed user info; the app Timehop compromised 21 million phone numbers and email addresses; glitches and breaches exposed the private information of 52.5 million Google+ users; and a cool 500 million Marriott Starwood hotel guests’ data was exposed in a four-year-long database leak. The most unsettling of these reports involved the ways our data moves around the internet without our explicit knowledge. In late December, a New York Times report detailed Facebook’s data-sharing partnerships, which included deals with several massive foreign companies that allowed for the sharing of users’ contact lists and address books. One of the most shocking claims revolved around Facebook partner contracts that allegedly allowed Netflix and Spotify to “read, write, and delete users’ private messages.”

This raucous parade of privacy missteps has stoked a growing collective outrage about tech companies playing fast and loose with personal information we have assumed they would properly secure and protect from misuse. There have been Senate hearings, Twitter protests, angry defections, pointed screeds. We’re mad and getting madder.


But are we sure we know why? Certainly, we're aware that some companies are bed-shittingly poor stewards of our personal information, that our data has been left vulnerable, or sold, or misused. But do we really understand what that means for our safety and security online — and when it actually matters?

There are clearly times when we don't, when any effort to understand what actually happened to our personal data is subsumed by knee-jerk outrage — or apathy.

Take that New York Times Facebook privacy exposé. A number of the partnerships reported by the paper — data-sharing alliances with Chinese and Russian companies — seem to confirm long-held beliefs that Facebook’s obsession with growth and scale has indeed come at the expense of user privacy. But other outrage-inspiring details were oversold. The Times said Netflix and Spotify were allowed to read and write private user messages. But as Slate’s Will Oremus noted, this access wasn’t particularly untoward. It was “about allowing Facebook users to read, write, and delete their own Facebook messages from within Netflix and Spotify once they linked their accounts and logged in.” In other words, it wasn’t blanket, do-whatever-the-hell-you-want access to private information. Similarly, the permissions listed in the Twinning app’s terms of service, though they might seem excessive and invasive (and perhaps are), are actually fairly common. Take a look at Instagram’s.

It’s this kind of nuance that’s so often lost in the fallout from privacy breaches. That’s understandable, because this stuff is frustrating and confusing, top to bottom. Terms of service agreements are ludicrous rat’s nests of legalese and business and engineering terminology. The companies that create them are vast and complex, their inner workings full of dizzyingly complex trade secrets. And their businesses are changing in ways that can quickly turn a blanket liability protection for a technology for which we imagined only a few possible and largely reasonable uses into a blanket protection for a technology with unsettling new uses that we never imagined.

And even if we did read the fine print on the services we use, many of the most troubling overreaches happen largely out of sight. In December, the German mobile security initiative Mobilsicher released a report detailing how Android apps like Tinder, Grindr, and others are quietly transmitting sensitive data about people’s religious affiliation, dating preferences, and health to Facebook through a complex process of linking and cross-referencing advertiser IDs from mobile devices. The report notes that while Facebook didn’t conceal this practice, most app developers weren’t aware of it, likely because they didn’t scrutinize Facebook’s software development kit terms of service as closely as they should have. Even more confusing? Facebook views this sort of data collection as an industry-standard practice, not an incursion on privacy.

The end result of this broader privacy reckoning is a bizarre, low-grade feeling of anxiety about the safety of our digital lives, but without any concrete way to see the larger picture. Reported data abuses that sound scandalous — like Spotify and Netflix’s message-reading partnership — may very well not be; meanwhile, mundane-sounding decisions like granting an academic researcher API access for a Facebook quiz app can result in a global political data scandal like Cambridge Analytica.

We’re increasingly certain that our information is being mined and exploited, but it’s not always clear to what extent. Or how bad the consequences might be. The effect of this realization is somewhat paralyzing. A recent Verge piece described this feeling as it relates to online ads, arguing that people “hate the poorly-targeted ads that show them products they’ve already bought, and they fear the hyper-targeted ads showing them things the machine has deduced they might buy in the future.” But it’s not just ads — we’re constantly trying to figure out just exactly how fucked our digital selves really are. Facebook users were rightly furious that raw data from up to 87 million Facebook profiles was harvested and eventually sold to Cambridge Analytica. But the actual efficacy of Cambridge Analytica’s fabled psychographic profiling is up for debate. Cambridge Analytica was selling its services as a kind of 21st-century, super-effective persuasion marketing, but some scientists and researchers have dismissed those tactics as modern-day snake oil.


Which is only to say that more than a decade into the platform-era internet, it’s still unclear exactly what our data is really doing out there. We know it’s used to target us with advertisements and create comprehensive profiles that do everything from creating personalized music playlists to suggesting predictive text when we’re typing emails. We know, from requesting our profiles from Google and Facebook, that the granularity and volume of information is dizzying. We know that detailed, GPS-rendered location histories track users’ movements by the minute. We know that search histories stored across devices catalog everything from mundane queries to intensely private obsessions, anxieties, and moods. We know that targeted advertising profiles can reveal gender, age, and hobbies, as well as things like career status, interests, relationship status, and income. We know that it would be unsettling and even horrifying for all this data to fall into the wrong hands. But whose are the wrong hands? Whose are the right ones?

Tech companies and app creators are the beneficiaries of this collective confusion. Yes, they take a lot of shit (rightfully so) when they stumble, but the uncertainty around the difference between a malignant privacy breach and a more benign one can provide cover for egregious lapses and oversights. Opaque algorithms and operations allow executives to dismiss the concerns of journalists and activists as unfounded or ignorant. They argue that critics are casting normal, industry-standard practices and terms of service agreements as malicious.

What does it say about us or the culture built atop the modern internet that Byzantine terms of service agreements that few understand or even bother reading govern so much of our lives online? As one privacy scholar put it to me years ago, “We’re increasingly dominated by legal agreements we don’t have any chance to negotiate.”


And beyond this, such minutiae obscure the larger, more urgent problem: The internet is in many ways powered by the exploitation of our data. So much of the language of the big technology platforms is the rhetoric of connection. (Facebook’s original mission statement included the mandate, “Make the world more open and connected.”) But connection is also disclosure. That disclosure can be explicit (building one of Facebook’s social graphs means voluntarily telling the network who your friends and family are and how you relate to them) but also occasionally unexpected (merely turning on your phone turns you into a human beacon, readily accessible by bounty hunters). Geolocation is disclosure. Convenience, too, is disclosure, fueled by an invasive advertising industry that views its customers as something to be targeted.

The real scandal isn’t a semantic quibbling over whether a specific terms of service agreement or partnership program is exploitative — it’s that such agreements are the norm. Handing over at least some of your personal data is table stakes for participation in today’s internet. That transaction appears simple, but it’s extraordinarily complex, particularly given the pace of innovation and how quickly it can change what can be done with our data. This is why conversations about data privacy are so difficult, and so often lead to outrage and criticism, but rarely to action. The great privacy reckoning is here, but the question remains: How are we supposed to have a meaningful, impactful conversation about this stuff when we clearly don’t understand it?
